How Do You Avoid CPU Throttling in Kubernetes?

To ensure that your application response times remain low and your CPU doesn’t get throttled, you first need to understand that you can’t detect CPU throttling just by looking at CPU utilization. You need to take into account all the analytics that go into application performance. CPU throttling is a key application performance metric because response time correlates directly with it. This is great news for you, as you can get this metric directly from Kubernetes and OpenShift.

Throttling hurts your application’s performance because it increases response time. To bring some color to this, imagine you set a CPU limit of 200m (200 millicores, or 0.2 CPU) and that limit is translated to a CFS quota in the container’s underlying Linux cgroup. Because the default enforcement period is only 100ms, the container can use only 20ms of CPU in each period. If your task needs more than 20ms of CPU, it is throttled for the rest of the period and has to wait for the next one. A task that needs 80ms of CPU, for example, takes about 320ms, or 4x longer, to complete. High response times are directly correlated with periods of high CPU throttling, and this is exactly how Kubernetes was designed to work. Even if your underlying node has more than enough resources, your container workload will still be throttled because it was not configured properly.
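The arithmetic in the 200-millicore example can be checked with a small simulation. The Python sketch below is illustrative and is not how the kernel scheduler is implemented. It assumes a single-threaded, purely CPU-bound task that starts at the beginning of a period and never blocks, and the function names `quota_ms` and `wall_time_ms` are hypothetical.

```python
# Sketch: how a CFS quota stretches the wall-clock time of a CPU-bound task.
# Assumptions (not kernel behavior in general): a single-threaded task that
# starts at a period boundary, never blocks, and uses the 100ms default period.


def quota_ms(limit_millicores: int, period_ms: int = 100) -> float:
    """CPU time the container may use in each enforcement period."""
    return limit_millicores * period_ms / 1000


def wall_time_ms(cpu_needed_ms: float, limit_millicores: int, period_ms: int = 100) -> float:
    """Wall-clock time to finish a task that needs `cpu_needed_ms` of CPU."""
    quota = quota_ms(limit_millicores, period_ms)
    elapsed = 0.0
    remaining = cpu_needed_ms
    while remaining > quota:
        # Run for the full quota, then sit throttled until the next period starts.
        remaining -= quota
        elapsed += period_ms
    # The final slice fits inside one period's quota, so no further throttling.
    return elapsed + remaining


if __name__ == "__main__":
    print(quota_ms(200))          # 20.0
    print(wall_time_ms(80, 200))  # 320.0
    print(wall_time_ms(15, 200))  # 15.0
```

With a 200m limit, an 80ms task is spread across four periods and finishes after roughly 320ms, a 4x slowdown, while a 15ms task fits inside a single quota and is not slowed at all.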

So what’s going on here? CPU throttling occurs when you configure a CPU limit on a container, which can inadvertently slow your application’s response time.
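To see how a limit becomes a quota, here is a simplified Python sketch of the conversion. It assumes the default 100ms (100,000µs) CFS period and a proportional mapping from millicores to quota. The helpers `to_millicores` and `cfs_quota_us` are hypothetical and only understand plain decimals and the `m` suffix, not every Kubernetes quantity format. On cgroup v1 the result corresponds to `cpu.cfs_quota_us`, and on cgroup v2 to the quota field of `cpu.max`.

```python
# Sketch: translate a Kubernetes CPU limit string into a CFS quota.
# Simplified (hypothetical helpers): only handles plain decimals ("2", "0.5")
# and the millicore suffix ("200m"); real Kubernetes quantities allow more forms.
from decimal import Decimal

CFS_PERIOD_US = 100_000  # default CFS enforcement period: 100ms


def to_millicores(cpu_limit: str) -> int:
    """Convert a CPU limit such as '200m' or '0.5' to millicores."""
    if cpu_limit.endswith("m"):
        return int(cpu_limit[:-1])
    return int(Decimal(cpu_limit) * 1000)


def cfs_quota_us(cpu_limit: str, period_us: int = CFS_PERIOD_US) -> int:
    """Microseconds of CPU time the container may use per period."""
    return to_millicores(cpu_limit) * period_us // 1000


if __name__ == "__main__":
    for limit in ("200m", "0.5", "2"):
        # Prints: 200m 20000, then 0.5 50000, then 2 200000
        print(limit, cfs_quota_us(limit))
```

A quota larger than the period (such as 200,000µs for a limit of `2`) means the container's threads can together consume up to two CPUs' worth of time in each period. Once that combined budget is spent, the container is throttled in exactly the same way.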

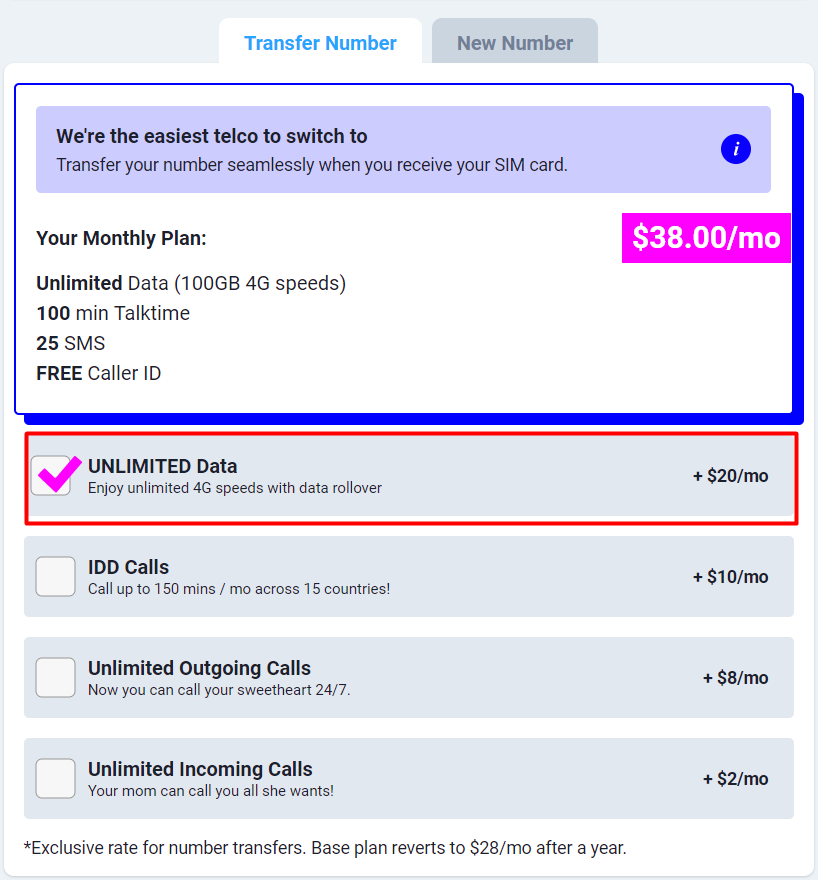

In the above figure, the CPU utilization of the container is only 25%, which makes it a natural candidate to resize down.

Figure 2: Huge spike in response time after resizing to ~50% CPU utilisation

But after we resized the container down (its CPU utilization is now 50%, still not high), the response time quadrupled!
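The resize example shows why utilization alone can mislead: if usage stays the same, halving the limit doubles utilization from 25% to 50% while saying nothing about how often the container hits its quota. The Python sketch below compares the two signals using two samples of the cAdvisor counters that Kubernetes exposes (`container_cpu_usage_seconds_total`, `container_cpu_cfs_periods_total`, `container_cpu_cfs_throttled_periods_total`). The `Sample` class and helper functions are hypothetical. The numbers in the demo are made up for illustration and are not taken from the figures.

```python
# Sketch: compare CPU utilization against the CFS throttling ratio.
# Assumes two samples of cAdvisor counters taken `interval_s` seconds apart.
# `Sample`, `utilization`, and `throttled_ratio` are hypothetical helpers.
from dataclasses import dataclass


@dataclass
class Sample:
    cpu_usage_seconds: float  # container_cpu_usage_seconds_total
    periods: int              # container_cpu_cfs_periods_total
    throttled_periods: int    # container_cpu_cfs_throttled_periods_total


def utilization(before: Sample, after: Sample, interval_s: float, limit_cores: float) -> float:
    """Average CPU used over the interval, as a fraction of the CPU limit."""
    used_cores = (after.cpu_usage_seconds - before.cpu_usage_seconds) / interval_s
    return used_cores / limit_cores


def throttled_ratio(before: Sample, after: Sample) -> float:
    """Fraction of enforcement periods in which the container exhausted its quota."""
    periods = after.periods - before.periods
    if periods == 0:
        return 0.0
    return (after.throttled_periods - before.throttled_periods) / periods


if __name__ == "__main__":
    # Made-up numbers: a 60s window and a 0.5-core limit.
    before = Sample(cpu_usage_seconds=0.0, periods=0, throttled_periods=0)
    after = Sample(cpu_usage_seconds=15.0, periods=600, throttled_periods=240)
    print(f"utilization: {utilization(before, after, 60.0, 0.5):.0%}")  # utilization: 50%
    print(f"throttled: {throttled_ratio(before, after):.0%}")            # throttled: 40%
```

In this hypothetical case the container looks only half busy, yet it was throttled in 40% of its enforcement periods. That is the kind of signal that can explain a response-time spike like the one in Figure 2.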
